The proposed method is capable of generating a sign language video, given a written or spoken language sentence. To advance the field of SLP, we propose a new approach, harnessing methods from NMT, computer graphics, and neural network based image/video generation. This results in at best crude, and at worst incorrect translations, and results in the indicative ‘robotic’ motion seen in many avatar based approaches. Additionally, by treating sign language as a concatenation of isolated glosses, any context and meaning conveyed by non-manual features is lost. Translating a spoken sentence into sign glosses is a non-trivial task, as the ordering and number of glosses does not match the words of the spoken language sentence (see Fig. However, there are several problems with this method. Another method relies on translating the spoken language into sign glosses, Footnote 1 and connecting each entity to a parametric representation, such as the hand shape and motion needed to animate the avatar. When driven using motion capture data, avatars can produce life-like signing, however this approach is limited to pre-recorded phrases, and the production of motion capture data is costly. The problem of SLP is generally tackled using animated avatars, such as Cox et al. facial expressions, mouthings, body posture) features. upper body motion, hand shape and trajectory) and non-manual (i.e.

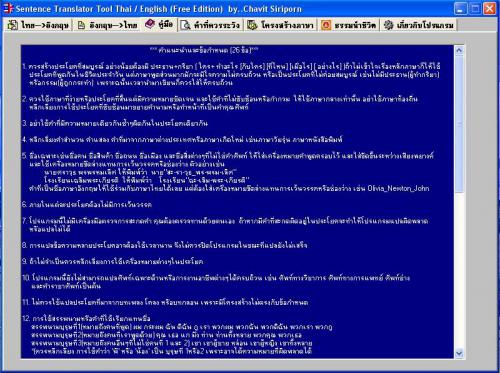

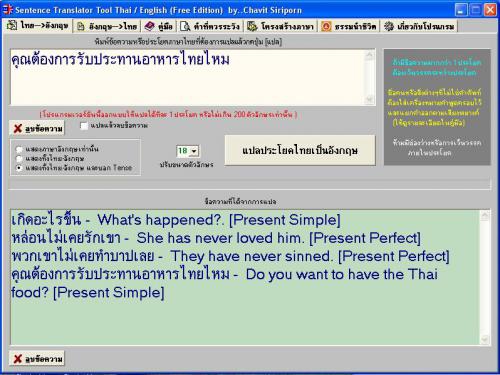

Asl sentence translator manual#

These channels include both the manual (i.e. Unlike spoken languages, sign languages employ multiple asynchronous channels (referred to as articulators in linguistics) to convey information. Furthermore, generating sign language from spoken language is a complicated task that cannot be accomplished with a simple one-to-one mapping. However, there is no guarantee that someone who’s first language is, for example, British Sign Language, is familiar with written English, as the two are completely separate languages. This is due to the misconception that deaf people are comfortable with reading spoken language and therefore do not require translation into sign language. It requires machine translation methods to find a mapping between a spoken and signed language, that takes into account both their language models.Ĭommercial applications for sign language primarily focus on SLR, by mapping sign to spoken language, typically providing a text transcription of the sequence of signs, such as Elwazer ( 2018), and Robotka ( 2018). 1 which demonstrates that both the tokenization of the languages and their ordering is different). This makes the task of translating between spoken and signed languages a complex problem, as it is not simply an exercise of mapping text to gestures word-by-word (see Fig. Like spoken languages, sign languages have their own grammatical rules and linguistic structures. Whilst not all these people rely on sign languages as their primary form of communication, they are widely used, with an estimated 151,000 users of British Sign Language (BDA: British Deaf Association 2019) in the United Kingdom, and approximately 500,000 people primarily communicating in sign languages across the European Union (EU: European Parliament 2018). 
We further demonstrate the video generation capabilities of our approach for both multi-signer and high-definition settings qualitatively and quantitatively using broadcast quality assessment metrics.Īccording to the World Health Organization there are around 466 million people in the world that are deaf or suffer from disabling hearing loss (WHO: World Health Organization 2018). We set a baseline for text-to-gloss translation, reporting a BLEU-4 score of 16.34/15.26 on dev/test sets. We evaluate the translation abilities of our approach on the PHOENIX14 T Sign Language Translation dataset.

This is the first approach to continuous sign video generation that does not use a classical graphical avatar. The resulting pose information is then used to condition a generative model that produces photo realistic sign language video sequences.

We first translate spoken language sentences into sign pose sequences by combining an NMT network with a Motion Graph. We achieve this by breaking down the task into dedicated sub-processes. Contrary to current approaches that are dependent on heavily annotated data, our approach requires minimal gloss and skeletal level annotations for training.

Our system is capable of producing sign videos from spoken language sentences. We present a novel approach to automatic Sign Language Production using recent developments in Neural Machine Translation (NMT), Generative Adversarial Networks, and motion generation.
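The BLEU-4 score quoted for text-to-gloss translation measures how many 1- to 4-grams of a predicted gloss sequence also occur in the reference sequence. The following is a minimal sketch of corpus-level BLEU-4, assuming a single reference per sentence and no smoothing; it is not the evaluation script used for the reported numbers, and the function names and the gloss tokens in the example are hypothetical.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Return a Counter of all n-grams (as tuples) in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def corpus_bleu4(hypotheses, references):
    """Corpus-level BLEU-4 in [0, 1]; one reference per hypothesis, no smoothing."""
    matches = [0] * 4  # clipped n-gram matches for n = 1..4
    totals = [0] * 4   # hypothesis n-gram counts for n = 1..4
    hyp_len = ref_len = 0
    for hyp, ref in zip(hypotheses, references):
        hyp_len += len(hyp)
        ref_len += len(ref)
        for n in range(1, 5):
            hyp_counts = ngrams(hyp, n)
            ref_counts = ngrams(ref, n)
            # Counter intersection clips each count by its count in the reference.
            matches[n - 1] += sum((hyp_counts & ref_counts).values())
            totals[n - 1] += sum(hyp_counts.values())
    if hyp_len == 0 or min(matches) == 0:
        return 0.0
    # Geometric mean of the four modified n-gram precisions.
    log_precision = sum(math.log(m / t) for m, t in zip(matches, totals)) / 4
    # Brevity penalty punishes hypotheses shorter than their references.
    brevity_penalty = 1.0 if hyp_len > ref_len else math.exp(1 - ref_len / hyp_len)
    return brevity_penalty * math.exp(log_precision)


# Made-up gloss sequences, for illustration only.
predicted = [["MORGEN", "REGEN", "NORD", "WIND", "STARK"]]
reference = [["MORGEN", "REGEN", "NORD", "WIND", "SCHWACH"]]
print(round(100 * corpus_bleu4(predicted, reference), 2))
```

The function returns a value between 0 and 1; BLEU scores are conventionally reported multiplied by 100, which is why the example scales the result before printing.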
